Python Serial Communication Library

Arduino and Raspberry Pi Serial Communication (Code and Life)

Today's the last day of my summer holiday, and I had some free time on my hands. So I decided to see if I could get my Arduino Uno and Raspberry Pi to talk to each other. It turned out the task was even easier than my previous Pi-to-RS-232 project.

All I needed was two 1 kOhm resistors to form a voltage divider between the Arduino TX pin and the Pi RX pin. The Arduino understands the Pi's 3.3 V signal levels just fine, so the Pi TX to Arduino RX line needed no voltage shifting at all.

IMPORTANT UPDATE: It turns out that the RX pin on the Arduino is held at 5 V even when that pin is not initialized. I suspect this is because the Arduino is programmed via these same pins every time you flash it from the Arduino IDE, and there are external weak pull-ups to keep the lines at 5 V at other times. So the method described below may be risky. I suggest either adding a resistor in series with the RX pin or using a proper level converter (see this post for details on how to accomplish that). And if you do try the method below, never connect the Pi to the Arduino RX pin before you have already flashed the program to the Arduino, otherwise you may end up with a damaged Pi!

Setting up the Raspberry Pi for serial communications

In order to use the Pi's serial port for anything other than a console, you first need to disable getty (the program that displays the login screen) by commenting the serial line out of the Pi's /etc/inittab. Here is that line, already commented out:
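The divider arithmetic is worth checking before anything is wired to the Pi. Below is a minimal sketch, assuming ideal resistors and a Pi RX input that draws negligible current; the function names and the 3.3 V limit constant are illustrative, not part of any library.

```python
# Unloaded voltage divider: Vout = Vin * R_bottom / (R_top + R_bottom).
# Assumes ideal resistors and a high-impedance Pi RX input (illustrative sketch).

PI_MAX_INPUT_V = 3.3  # Raspberry Pi GPIO pins use 3.3 V logic


def divider_output(v_in: float, r_top: float, r_bottom: float) -> float:
    """Voltage at the junction between r_top (to the signal) and r_bottom (to ground)."""
    if r_top < 0 or r_bottom <= 0:
        raise ValueError("r_top must be >= 0 and r_bottom must be > 0")
    return v_in * r_bottom / (r_top + r_bottom)


def safe_for_pi(v_in: float, r_top: float, r_bottom: float) -> bool:
    """True if the divided signal stays at or below the Pi's 3.3 V logic level."""
    return divider_output(v_in, r_top, r_bottom) <= PI_MAX_INPUT_V


if __name__ == "__main__":
    # Arduino TX (5 V) -> 1 kOhm -> Pi RX -> 1 kOhm -> GND
    print(divider_output(5.0, 1_000, 1_000))  # 2.5
    print(safe_for_pi(5.0, 1_000, 1_000))     # True
```

With two equal 1 kOhm resistors the 5 V Arduino signal is halved to 2.5 V, below the Pi's 3.3 V limit; wiring the Pi RX straight to the Arduino TX (r_top of 0) would put the full 5 V on the pin.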

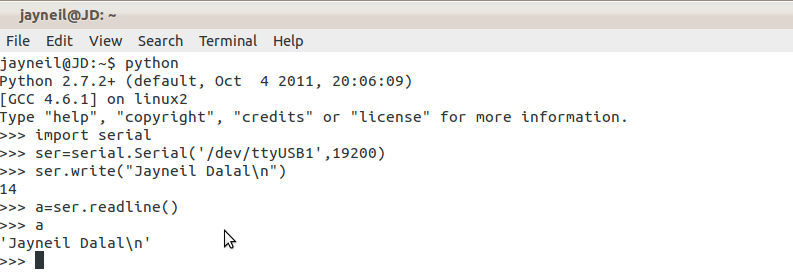

    #T0:23:respawn:/sbin/getty -L ttyAMA0 115200 vt100

If you don't want the Pi sending stuff over the serial line when it boots, you can also remove the statements console=ttyAMA0,115200 and kgdboc=ttyAMA0,115200 from /boot/cmdline.txt. You'll need to reboot the Pi for the changes to take effect.

If you have a 3.3 V compatible serial adapter in your computer (or a MAX3232-based RS-232 adapter, in which case see my tutorial on building one), now is a good time to check that everything is working on the Pi side. Install minicom (sudo apt-get install minicom) and attach it to the serial port:

    minicom -D /dev/ttyAMA0

Then open PuTTY or a similar serial terminal on the PC side. Everything you type into minicom should appear in the serial terminal on the PC side, and characters typed into the serial terminal should appear in minicom.

Connecting Arduino Uno to Raspberry Pi

There are basically two ways to link the Arduino to the Pi. The easier route is to just plug the Arduino into the Pi via USB. The USB-to-serial bridge should be recognized automatically and be available at /dev/ttyACM0. But if you want to do it the hard way like I did, you can also connect the Pi GPIO pins (seen on the right; the 3.3 V pin is not needed this time) to the Arduino:

- Connect the grounds of both devices (triple-check first that they really are the grounds).
- Connect Pi TX to Arduino RX (series resistor recommended; only connect after flashing, never flash with the Pi connected).
- Connect Arduino TX to Pi RX via a voltage divider.

As a voltage divider, I used a 1 kOhm resistor between the Arduino TX and the Pi RX, and another 1 kOhm resistor between the Pi RX and ground. That way, the 5 V Arduino signal voltage is effectively halved. Connect the resistor ladder first, and only then connect the Pi RX between the two resistors, so that at no point is there a voltage over 3.3 V on the Pi! You can see the connection in action here.

Communication between Pi and Uno

On the Raspberry Pi side, I'd recommend minicom (see the command line above) for testing, and pySerial (sudo apt-get install python3-serial) for interaction. Using Python, you can easily make the Pi do lots of interesting things when commands are received from the Arduino side. Here's a simple ROT13 program that reads lines from the GPIO serial interface and writes them back ROT13-encoded:

    import serial

    # ROT13 table: A-M <-> N-Z and a-m <-> n-z; other bytes pass through unchanged
    ROT13 = bytes.maketrans(
        b"ABCDEFGHIJKLMabcdefghijklmNOPQRSTUVWXYZnopqrstuvwxyz",
        b"NOPQRSTUVWXYZnopqrstuvwxyzABCDEFGHIJKLMabcdefghijklm")

    # Creating the Serial object also opens the port (GPIO UART, 9600 baud)
    port = serial.Serial("/dev/ttyAMA0", 9600)

    try:
        while True:
            line = port.read_until(b"\r")  # terminals send CR when you press Enter
            port.write(line.translate(ROT13))
    except KeyboardInterrupt:
        pass  # Ctrl+C ends the loop
    finally:
        port.close()

For the Arduino, you can use the serial capabilities of the Arduino environment. Here's a simple program that echoes back everything that is sent from the Pi to the Arduino:

    // Echo every byte received from the Pi back to it
    void setup() {
      Serial.begin(9600);  // must match the baud rate used on the Pi side
    }

    void loop() {
      if (Serial.available() > 0) {
        byte incomingByte = Serial.read();
        Serial.write(incomingByte);
      }
    }

Note that you shouldn't use these two programs together: nothing would happen, and you wouldn't see anything either. The Pi-side programs can be tested with a PC serial adapter and PuTTY, and the Arduino-side programs with minicom running on the Pi.

That's it for this time. Now that I have the two things communicating, I think I'll do something useful with the link next. Maybe a simple Arduino HDMI shield using the Raspberry Pi?

Joonas Pihlajamaa. Coding since 1990 in Basic, C/C++, Perl, Java, PHP, Ruby and Python, to name a few. Also interested in math, movies, anime, and the occasional…

Integrate SparkR and R for Better Data Science Workflow

R is one of the primary programming languages for data science, with more than 1… R is open source software that is widely taught in colleges and universities as part of statistics and computer science curricula. R uses the data frame as its API, which makes data manipulation convenient. R has a powerful visualization infrastructure, which lets data scientists interpret data efficiently.

However, data analysis in R is limited by the amount of memory available on a single machine, and since R is single-threaded, it is often impractical to use R on large datasets. To address R's scalability issue, the Spark community developed the SparkR package, which is based on a distributed data frame that enables structured data processing with a syntax familiar to R users.
Spark provides a distributed processing engine, data sources, and off-memory data structures. R provides a dynamic environment, interactivity, packages, and visualization. SparkR combines the advantages of both Spark and R. In the following sections, we will illustrate how to integrate SparkR with R to solve some typical data science problems from a traditional R user's perspective.

SparkR Architecture

SparkR's architecture consists of two main components, as shown in this figure: an R-to-JVM binding on the driver that allows R programs to submit jobs to a Spark cluster, and support for running R on the Spark executors. Operations executed on SparkR DataFrames are automatically distributed across all the nodes available on the Spark cluster. We use a socket-based API to invoke functions on the JVM from R. Sockets are supported across platforms and are available in both languages without any external libraries. Since most of the messages being passed are control messages, the cost of using sockets compared with other in-process communication methods is not very high.

Data Science Workflow

Data science is an exciting discipline that allows you to turn raw data into knowledge. For an R user, a typical data science project looks something like this. First, you must import your data into R. This typically means that you take data stored in a file, database, cloud storage or web API, and load it into a data frame in R. Once you've imported your data, it is a good idea to clean it up. Real-life datasets contain redundant rows/columns and missing values, so we'll need multiple steps to resolve these issues. Once you have cleaned up the data, a common next step is to transform it with select/filter/arrange/join/groupBy operators.
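The select/filter/arrange/groupBy vocabulary is easiest to see on a toy example. This is a minimal plain-Python sketch, not dplyr or SparkR: it assumes rows stored as dicts, the rows themselves are made-up values, and the "Carrier" column and helper names are hypothetical, chosen only to mirror the operator names in the text (join is left out for brevity).

```python
from collections import defaultdict
from operator import itemgetter

# Hypothetical toy rows (made-up values); column names are illustrative only.
rows = [
    {"Carrier": "AA", "Distance": 733, "ArrDelay": 12},
    {"Carrier": "UA", "Distance": 1846, "ArrDelay": -5},
    {"Carrier": "AA", "Distance": 1389, "ArrDelay": 30},
    {"Carrier": "UA", "Distance": 337, "ArrDelay": 7},
]


def select(rows, *cols):
    """Keep only the named columns."""
    return [{c: r[c] for c in cols} for r in rows]


def filter_rows(rows, pred):
    """Keep rows for which pred(row) is true."""
    return [r for r in rows if pred(r)]


def arrange(rows, col, descending=False):
    """Sort rows by a single column."""
    return sorted(rows, key=itemgetter(col), reverse=descending)


def group_by_mean(rows, key, value):
    """Average `value` within each group of `key`."""
    groups = defaultdict(list)
    for r in rows:
        groups[r[key]].append(r[value])
    return {k: sum(v) / len(v) for k, v in groups.items()}


if __name__ == "__main__":
    long_flights = filter_rows(rows, lambda r: r["Distance"] > 500)
    print(arrange(select(long_flights, "Carrier", "ArrDelay"), "ArrDelay", descending=True))
    # [{'Carrier': 'AA', 'ArrDelay': 30}, {'Carrier': 'AA', 'ArrDelay': 12}, {'Carrier': 'UA', 'ArrDelay': -5}]
    print(group_by_mean(rows, "Carrier", "ArrDelay"))
    # {'AA': 21.0, 'UA': 1.0}
```

Each helper returns a new list, so the operations chain the same way the R verbs do; the group-by step is the one that shrinks many rows down to one row per group.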
Together, data cleanup and transformation are called data wrangling, because getting your data into a form that's suitable to work with often feels like a fight! Once you have rationalized the data to the rows/columns you need, there are two main engines of knowledge generation: visualization and modeling. These have complementary strengths and weaknesses, so any real analysis will iterate between them many times.

As the amount of data continues to grow, data scientists follow a similar workflow to solve problems, but with additional new tools such as SparkR. However, SparkR can't cover every feature R offers, and it is also unnecessary for it to do so, because not every feature needs to scale and not every dataset is large. For example, if you have 1 billion records, you may not need to train on the full dataset if your model is as simple as a logistic regression with dozens of features, whereas a random forest classifier with thousands of features may benefit from more data. We should use the right tool in the right place. In the following sections, we will illustrate typical scenarios and best practices for integrating SparkR and R.

SparkR and R for Typical Data Science Workflow

Adopting SparkR can overcome the main scalability issue of single-machine R and accelerate the data science workflow.

Big Data, Small Learning

Users typically start with a large dataset stored as JSON, CSV, ORC or Parquet files on HDFS or AWS S3, or in an RDBMS data source. This is increasingly common with the growing adoption of cloud-based big data infrastructure. Data science begins by joining the required datasets and then:

- performing data cleaning operations to remove invalid rows or columns;
- selecting specific rows or columns.

Following these steps, users typically aggregate or sample their data, which reduces the size of the dataset. We usually call these steps data wrangling; they involve manipulating a large dataset, and SparkR is the most appropriate tool to handle this workload.
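The clean-then-sample reduction can be sketched outside Spark. This is a minimal plain-Python illustration, assuming rows stored as dicts with `None` marking missing values; `dropna` and `bernoulli_sample` are hypothetical helpers named after the R operations, and the sampling shown is Bernoulli sampling without replacement, one simple way to keep roughly a given fraction of rows.

```python
import random


def dropna(rows):
    """Drop every row that has a missing (None) value in any column."""
    return [r for r in rows if all(v is not None for v in r.values())]


def bernoulli_sample(rows, fraction, seed=None):
    """Keep each row independently with probability `fraction` (no replacement)."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    rng = random.Random(seed)  # seeded generator so the sample is reproducible
    return [r for r in rows if rng.random() < fraction]


if __name__ == "__main__":
    # Hypothetical rows: the row with id 3 has a missing DepDelay.
    rows = [{"id": i, "DepDelay": (None if i == 3 else i % 7)} for i in range(10_000)]
    clean = dropna(rows)
    sample = bernoulli_sample(clean, 0.1, seed=42)
    print(len(clean))   # 9999
    print(len(sample))  # roughly 1000; the exact count depends on the seed
```

Because each row is kept independently, the sample size is only approximately `fraction` times the input size; fixing the seed makes repeated runs return the same rows.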
Then the preprocessed data is collected locally and used for building models or performing other statistical tasks in single-machine R. Typical data scientists will be familiar with this stage and can benefit from thousands of CRAN packages. Data scientists have usually performed exploratory data analysis and manipulation with the popular dplyr package. In the big data era, SparkR provides the same functions, with a largely matching API, to handle larger-scale datasets. For traditional R users, the migration should be very smooth.

We will use the well-known airlines dataset from stat-computing.org. We first use functionality from the SparkR DataFrame API to apply some preprocessing to the input. As part of the preprocessing, we decide to drop rows containing null values by applying dropna:

    # Load the flight and plane data from CSV (file paths not shown)
    airlines <- read.df(path = "…", source = "csv", header = "true", inferSchema = "true")
    planes <- read.df(path = "…", source = "csv", header = "true", inferSchema = "true")
    joined <- join(airlines, planes, airlines$TailNum == planes$tailnum)
    df1 <- select(joined, "aircraft_type", "Distance", "ArrDelay", "DepDelay")
    df2 <- dropna(df1)             # drop rows containing null values
    df3 <- sample(df2, TRUE, 0.1)  # sample about 10% of the rows
    head(df3)

head(df3) prints the aircraft_type, Distance, ArrDelay and DepDelay columns; the first rows shown all have the aircraft type "Fixed Wing Multi-Engine".

After data wrangling, users typically aggregate or sample their data, which reduces the size of the dataset. Naturally, the question arises whether sampling decreases the performance of a model significantly. In many use cases, it's acceptable. For the other cases, we will discuss how to handle it in the section on large-scale machine learning.

A SparkR DataFrame inherits all of the optimizations made to the computation engine in terms of task scheduling, code generation, memory management, etc. For example, this chart compares the runtime performance of running group-by aggregation on 1… in R, Python, and Scala. From the graph, we can see that SparkR performs similarly to Scala and Python.

Partition Aggregate

User Defined Functions (UDFs)

Parallel execution of function
Partition aggregate workflows are useful for a number of statistical applications such as ensemble learning, parameter tuning or bootstrap aggregation. In these cases, users typically have a particular function that needs to be executed in parallel across different partitions of the input dataset, and the results from each partition are then combined using an aggregation function. SparkR provides UDFs to handle this kind of workload. SparkR UDFs operate on each partition of the distributed DataFrame and return local R columnar data frames, which are then converted into the corresponding format in the JVM.

Compared with traditional R, using this API (dapply) requires some changes to regular code. The following examples show how to add new columns of departure delay time in seconds and max actual delay time separately using SparkR UDFs:

    schema <- structType(structField("aircraft_type", "string"), struct…
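The partition-aggregate pattern behind dapply can be imitated in plain Python with `concurrent.futures`: split the rows into partitions, run the same function on every partition in parallel, then combine the per-partition results. This is an analogy, not SparkR's API; `add_delay_seconds`, `partition_max`, the partition count and the sample rows are hypothetical, and DepDelay is assumed to be in minutes, as in the airlines data.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(rows, n):
    """Split rows into n contiguous, roughly equal partitions (some may be empty)."""
    if n < 1:
        raise ValueError("need at least one partition")
    size, extra = divmod(len(rows), n)
    parts, start = [], 0
    for i in range(n):
        end = start + size + (1 if i < extra else 0)
        parts.append(rows[start:end])
        start = end
    return parts


def add_delay_seconds(part):
    """Per-partition function: add DepDelay_sec, assuming DepDelay is in minutes."""
    return [dict(r, DepDelay_sec=r["DepDelay"] * 60) for r in part]


def partition_apply(rows, func, n_partitions=4):
    """Run func on every partition in parallel; concatenate results in partition order."""
    parts = partition(rows, n_partitions)
    with ThreadPoolExecutor(max_workers=n_partitions) as pool:
        results = list(pool.map(func, parts))
    return [row for part in results for row in part]


def partition_max(rows, key, n_partitions=4):
    """Partition-aggregate: max within each partition, then max of the partial maxima."""
    parts = [p for p in partition(rows, n_partitions) if p]
    with ThreadPoolExecutor(max_workers=n_partitions) as pool:
        partial = list(pool.map(lambda p: max(r[key] for r in p), parts))
    return max(partial)  # raises ValueError if rows is empty


if __name__ == "__main__":
    # Hypothetical rows with departure delays in minutes.
    flights = [{"DepDelay": d} for d in [5, -2, 30, 0, 12]]
    print(partition_apply(flights, add_delay_seconds, n_partitions=3))
    print(partition_max(flights, "DepDelay", n_partitions=3))  # 30
```

Threads keep the sketch portable; for CPU-heavy per-partition work, `ProcessPoolExecutor` (with module-level functions in place of the lambda, which cannot be pickled) is the usual swap.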
